Business leaders grappling with growth imperatives and economic uncertainty find that generative AI promises new ways to engage customers, reduce costs, and offer products. It is another huge disruption to contend with, as well as an opportunity to gain a competitive edge.

We hear similar questions across different types of companies: Where to apply generative AI? How to meaningfully improve business results? How to make it work while limiting risks? Our experience guiding clients from business case through to results shows patterns for realizing meaningful benefits.

Generative AI – capabilities and limitations

A high-level understanding of generative AI's capabilities and limitations provides the basis for answering these questions.

The technology functions by associating a prompt or question with related data in a knowledge base. The knowledge base can include text, software code, imagery, video, or audio. Generative AI models map the probability that a data point in the knowledge base is related to another data point. For example, a large language model like the one behind ChatGPT measures the strength of relationships between words and sets of words to find related groups of words.

Generative AI uses maps of data in a knowledge base to:

- Create text, software code, music, and video

- Tailor the tone of a message or take on a persona

- Search based on concepts (semantic search)

- Translate, including from one programming language to another

- Find grammar issues in text and bugs in code

- Summarize

- Transcribe

The process of finding related data in a knowledge base highlights generative AI's key limitation: it repeats patterns without understanding why they exist. Generative AI has been likened to a "stochastic parrot" that answers questions but lacks awareness of why an answer does or does not work.

Generative AI models' knowledge also ends with the last date of their training data. Large language models like BERT, ChatGPT, and LLaMA are trained with data such as the entire text of Wikipedia or top news websites. Their training sets run to hundreds of terabytes of data and training costs millions, so the models are not continually updated. For example, without plugins, ChatGPT includes knowledge only through September 2021.

However, injecting recent data alongside prompts can overcome this limitation. Commonly referred to as retrieval augmentation (as in REALM, a retrieval-augmented language model) or retrieval-augmented generation (RAG), this approach pairs prompts with search engine results, recent company data, or other data in real time.
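A minimal sketch of the idea of pairing a prompt with recent data follows. It is illustrative only: the documents, function names, and the simple word-overlap ranking are assumptions standing in for a real search engine or company data store, and the resulting prompt would still need to be sent to a model of your choice.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for real search)."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]

def build_prompt(question: str, documents: list[str]) -> str:
    """Prepend retrieved context to the question before it is sent to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Use only the context below to answer.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Hypothetical company data that postdates a model's training cutoff.
RECENT_DOCS = [
    "Product 123 was discontinued in March and is no longer supported.",
    "Warehouse hours changed to 7am-5pm on weekdays.",
    "Product 456 ships with a two-year warranty.",
]

prompt = build_prompt("Is Product 123 still supported?", RECENT_DOCS)
print(prompt)
```

In practice the word-overlap ranking would likely be replaced by semantic search, but the shape stays the same: retrieve, then attach the results to the prompt.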

Where to apply generative AI

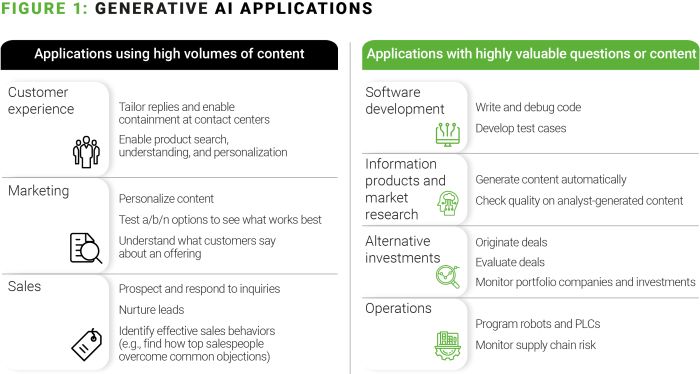

Generative AI delivers the best business results in applications where many people use content, or where a few people ask questions with highly valuable answers.

How to improve business results

In the past, computers have served as grunt workers and information gophers – counting inventory, tracking money, or measuring operational performance. However, deployed as knowledge workers and collaborators with humans, they can now realize their true potential and point the way to revelations about the complex systems in which we live and work.1

All AI projects start with understanding data. Fundamentally, AI is simple: models reproduce business decisions embedded in their training data. Our experience across clients shows AI delivers the best results when it is understood and supported by customer success, finance, operations, and sales. Functional areas should therefore receive training to understand how models work, contribute to understanding data, and identify how front-line workers can practically monetize models.

This means that business owners, not data science or IT, must take responsibility for designing training data and ensuring it matches ground truths. Front-line workers at dealers, manufacturing plants, or warehouses can then explain the real-world reasons for clusters, outliers, or anomalies in data as well as identify data quality needs.

Creating useful solutions generally starts by using training data to guide prompt tuning. This technique, often called few-shot prompting, attaches examples of how to answer questions to a prompt sent to a large language model.
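As a rough illustration of attaching examples to a prompt, the sketch below formats a handful of question/answer pairs ahead of a new question. The function name, the `Q:`/`A:` layout, and the example pairs are assumptions made for illustration; real examples would come from curated training data owned by the business.

```python
def few_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Format worked question/answer pairs ahead of the new question."""
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    # The final block leaves the answer open for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

# Hypothetical examples; the second pair is invented purely for illustration.
EXAMPLES = [
    (
        "Is Product 123 still supported?",
        "No. Product 123 is no longer supported because the company does not "
        "have replacement parts and so is unable to service the product.",
    ),
    ("Can I return an opened item?", "Yes, within 30 days with a receipt."),
]

few_shot = few_shot_prompt(EXAMPLES, "Is Product 789 still supported?")
print(few_shot)
```

Every example is sent with every query, which is why the number and length of examples affect cost.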

As with any software development, prompts should be rigorously designed and tested. Prompt engineering should reflect "understanding the psychology of a large language model", such as telling a model like ChatGPT to "answer as if you're a world-class expert on this topic". Prompts can include "chain of thought" to explain reasoning, such as "Product 123 is no longer supported because the company does not have replacement parts and so is unable to service the product."

For complex questions or very high volumes of queries, models can use a technique called fine-tuning. This approach uses the same base model but customizes how information is fed to the model (heads), the weighting within the neural network, and/or how the model selects top answers. Fine-tuning involves higher costs to train the model and keep the underlying infrastructure ready, but it improves the quality of answers. It also reduces the cost per query because only the prompt is passed to the model, not a set of prompt examples that require additional computation. Fine-tuning can be combined with prompt engineering.
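The cost trade-off can be made concrete with a small worked calculation. Every number below is hypothetical and chosen only to keep the arithmetic simple; the sketch also assumes the same per-token price for both approaches and ignores the ongoing infrastructure cost mentioned above, so treat it as a way to frame the question rather than a pricing model.

```python
def cost_per_query(prompt_tokens: int, example_tokens: int,
                   price_per_1k_tokens: float) -> float:
    """Cost of one query when input is billed per 1,000 tokens."""
    return (prompt_tokens + example_tokens) / 1000 * price_per_1k_tokens

# All figures are hypothetical assumptions, not vendor prices.
PRICE_PER_1K_TOKENS = 0.002      # assumed price, identical for both approaches
PROMPT_TOKENS = 100              # the user's question
EXAMPLE_TOKENS = 900             # examples attached to every query
FINE_TUNING_FIXED_COST = 900.0   # assumed one-off training cost

few_shot_cost = cost_per_query(PROMPT_TOKENS, EXAMPLE_TOKENS, PRICE_PER_1K_TOKENS)
fine_tuned_cost = cost_per_query(PROMPT_TOKENS, 0, PRICE_PER_1K_TOKENS)
saving_per_query = few_shot_cost - fine_tuned_cost

# Number of queries after which the one-off training cost is recovered.
break_even_queries = FINE_TUNING_FIXED_COST / saving_per_query

print(f"With examples:  ${few_shot_cost:.4f} per query")
print(f"Fine-tuned:     ${fine_tuned_cost:.4f} per query")
print(f"Break-even at about {break_even_queries:,.0f} queries")
```

Under these assumed figures the training cost is recovered after roughly 500,000 queries, which is why fine-tuning tends to suit very high query volumes.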

AlixPartners has helped clients through common challenges when moving AI models into production use. These difficulties can be controlled when a business:

- Deploys a limited number of powerful AI models that answer many questions, avoiding a profusion of models such as for individual customers or products.

- Tailors models by using scoring with thresholds set through customer self-service. For example, payments fraud detection can be tailored for each customer's tolerance for erroneously declining valid payments (false positives).

- Delineates roles for customer success, data science, and IT so that each focuses (rather than swarms) to onboard new customers or resolve production outages.
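The idea of one shared model with per-customer thresholds, as in the payments fraud example above, might look like the following sketch. The customer names, threshold values, and default are hypothetical; in a real system the score would come from the deployed fraud model and the thresholds from a self-service settings page.

```python
DEFAULT_THRESHOLD = 0.80  # assumed fallback for customers who set nothing

# Hypothetical thresholds chosen by customers through self-service.
# A higher threshold declines fewer payments, so fewer false positives.
customer_thresholds = {"acme": 0.95, "globex": 0.60}

def should_decline(customer_id: str, fraud_score: float) -> bool:
    """Decline a payment when the shared model's score meets the customer's threshold."""
    threshold = customer_thresholds.get(customer_id, DEFAULT_THRESHOLD)
    return fraud_score >= threshold

# One model produces the same score; only the decision differs per customer.
score = 0.85
decisions = {c: should_decline(c, score) for c in ("acme", "globex", "initech")}
print(decisions)
```

The design choice is that tailoring lives in configuration rather than in separate models, which keeps the number of models to maintain small.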

Case studies

Recent examples include:

- A grocery wholesaler building a new digital ordering system. The company had found that customers wanted more differentiated product descriptions and useful search functionality. Manually creating descriptions for 140,000 SKUs threatened to affect project deadlines, so the team used GPT-4 to generate the new product descriptions, saving over 2,500 hours of effort.

- A mid-sized manufacturer of RV parts seeking to reduce contact center costs. The business first analyzed customer inquiries to find the top examples of first call resolution and time to resolution. They then used voice-to-text transcription to turn these customer inquiries into prompts for Azure OpenAI. The solution provides suggested replies to customer service representatives, and the manufacturer saw an immediate average reduction in call time of 1 minute across the 800,000+ calls per year. Additionally, customers increasingly use a chatbot.

- A market research provider using generative AI to grow revenue faster than the cost of hiring analysts. The company is deploying AI, together with robotic process automation (RPA), to understand open-source data, generate elements of research products, and identify where customers ask similar questions to equip analysts to efficiently respond.

How to contain risks

Risks specific to generative AI include incorrect answers or "hallucinations", biased or toxic content, data security, and sustainability. Achieving results and containing risks are intertwined: risks are generally contained by effectively applying the same approaches that best deliver business outcomes. This starts with building understanding and support across a business.

Forming teams across business areas brings awareness and an understanding of risks from the beginning. A customer-facing solution, for example, should be shaped by customer success, finance, product, and sales rather than mostly data science or IT. If needed, compliance and legal should engage from the start. Business ownership will lead to ROI and risk containment.

An explanation capability can combat potential risks and help deliver the promised value on investments. AI explanation makes the inner workings of models understandable, creates explanatory interfaces to expose and enable correction of biases in model outputs, sets boundaries for the safe application of an AI model, and articulates a model's value for different stakeholders.2

Critically, too, AI solutions often work best in collaboration with people, rather than a robot acting independently. Generative AI should suggest content that is reviewed by a human before it is acted on.

Data security has both myths and actual risks. Prompts sent to cloud-based solutions are not kept and used by cloud providers like Amazon, Google, or Microsoft. Businesses can generally opt out of monitoring and testing. However, individual AI-based productivity tools that create content or check grammar have fewer controls and bring more risks. Company policies, training, and network monitoring can contain these concerns.

Large language models consume considerable power and cooling resources. Environmental impact and costs can be minimized first with efficient prompt tuning. After a model is shown to deliver effective answers, engineers should try smaller versions of base models to see if they retain quality. For example, GPT-4 (the foundation for ChatGPT) includes five versions with differing capability. This approach also limits costs. Experience shows the effectiveness of generative AI depends more on thoughtful prompt engineering than on using the latest or largest base models.
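One way to frame the "try smaller models" step is as a search for the smallest model that still clears a quality bar. The sketch below assumes a hypothetical `evaluate` function that returns a quality score between 0 and 1 for a model on a fixed test set; the model names and scores are placeholders, not measurements.

```python
from typing import Callable, Optional

def smallest_adequate_model(
    models_small_to_large: list[str],
    evaluate: Callable[[str], float],
    min_quality: float,
) -> Optional[str]:
    """Return the first (smallest) model whose quality score meets the bar."""
    for name in models_small_to_large:
        if evaluate(name) >= min_quality:
            return name
    return None  # no candidate is good enough

# Placeholder scores standing in for a real evaluation on a fixed test set.
HYPOTHETICAL_SCORES = {"small": 0.78, "medium": 0.91, "large": 0.93}

chosen = smallest_adequate_model(
    ["small", "medium", "large"],
    evaluate=HYPOTHETICAL_SCORES.__getitem__,
    min_quality=0.90,
)
print(chosen)
```

Fixing the test set and the quality bar before comparing models keeps the comparison fair and makes the cost saving easy to justify.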

What you should do now

- Experimentation: The early phase of generative AI has brought an explosion of good ideas, and teams should be encouraged to experiment with them. Creative thinkers who know the business well and are encouraged to innovate can invent new ways to create value.

- Data protection and security: While experimentation should be encouraged, proprietary data must be safeguarded. Therefore, consider using generative AI services from large cloud providers, which can bring more controls that are appropriate for enterprises and regulated industries.

- Education: Learning and development teams can play a major role in partnership with business and technology to structure generative AI content and training.

- Consideration of proven use cases: While generative AI is evolving rapidly, some use cases are already proven to deliver strong ROI. Consider using generative AI in contact centers, creative content development, and software development.

In conclusion, business leaders will truly harness the power of generative AI by building understanding across functional areas so that each supports and contributes to the derived solutions. Teams designing these solutions should include front-line workers to better understand the data available and identify how to practically act on models. Aligning horizontally across business functions and vertically from leaders to front-line users will also limit the potential risks and greatly enhance results.

The advent of generative AI represents a seismic disruption to the business world, offering not only huge opportunities but also significant risks. Ultimately, though, it will be inaction in this rapidly evolving environment that proves to be the most significant risk of all.

Footnotes

1. "The Mathematical Corporation: Where Machine Intelligence + Human Ingenuity Achieve the Impossible", Sullivan and Zutavern, 2017

2. Building an Artificial Intelligence Explanation Capability, MIS Quarterly Executive, Beath, Someh, Wixom, Zutavern, 2022
